“GPT-4o wasn’t just a tool to me. It helped me with anxiety, depression, and some of the darkest periods of my life.” A Reddit post with 10,000 upvotes captures what OpenAI failed to grasp. GPT-5’s debut was supposed to be the triumph of “unified AI”: one model to do it all, with a smart router that would eliminate the model picker that even Sam Altman disliked. In practice, though, a revolt broke out.

OpenAI Backtracks: GPT-4o Returns

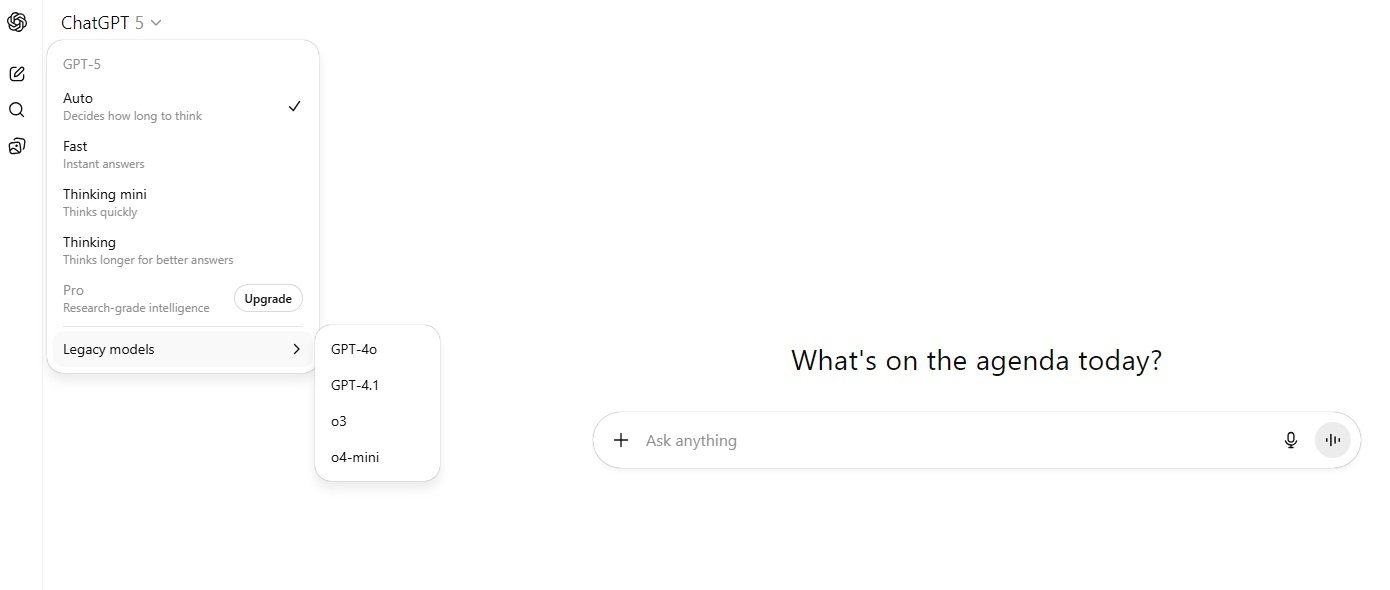

In under three days, OpenAI had to reverse course: it restored GPT-4o, significantly raised usage limits, and admitted it had underestimated users’ emotional attachment to the “older” models. The model selector reappeared, now more tangled than before: “Auto,” “Fast,” and “Thinking” modes that many people struggle to interpret.

When OpenAI abruptly removed GPT-4o, o3, and other legacy models, thousands felt betrayed. It wasn’t just about features: for many, those models had become digital companions, confidants, and creative assistants with distinct personalities.

In San Francisco, when Anthropic retired Claude 3 Sonnet, there was even a symbolic “funeral”: hundreds gathered to say goodbye to an AI. It sounds odd, but it reflects a phenomenon the tech industry is only starting to understand: people who use these tools daily grow attached to them.

For those paying $20 a month for ChatGPT Plus, watching the models they had built professional workflows and parasocial relationships around disappear overnight was a blow.

The “Smart” Router… That Misses the Mark

On paper, GPT-5’s premise made sense: a router that automatically picks the right model for each request, with no more confusing menus. In practice, many users reported that their queries were systematically routed to cheaper, less capable models, even when the request needed serious compute.

To fix this, OpenAI introduced three GPT-5 modes: “Auto” (the router), “Fast” (quick replies), and “Thinking” (deeper reasoning). The result? Even more complexity. The picker that was supposed to vanish is more crowded than ever: GPT-4o, GPT-4.1, o3, GPT-5 and its variants, plus the “mini” and “pro” lines.

Usage Limits: The Last Straw

At first, ChatGPT Plus subscribers ($20/month) could send only 200 GPT-5 Thinking messages per week. For many users accustomed to much higher daily caps on older models, that was unacceptable. The backlash was so strong that on Sunday Altman announced a huge increase: 3,000 messages per week for GPT-5 Thinking.

GPT-4o Comes Back… But No Longer Free

Altman then wrote: “Ok, we heard you on 4o; thanks for taking the time to give us feedback (and for the passion!).” GPT-4o returned to the picker for Plus users, and GPT-4.1 and o3 can be re-enabled from the advanced settings. There’s a catch, though: a model that had been available for free, albeit with limits, now requires payment. Anyone attached to it must subscribe at $20/month. Nick Turley, OpenAI VP and head of ChatGPT, acknowledged: “We don’t always nail it on the first try, but I’m proud of how fast the team can correct course.”

GPT-5’s Real Problem: (Lack of) Personality

More than limits or routing, many point to GPT-5’s personality: “cold,” “stiff,” “robotic.” It lacks GPT-4o’s warmth and occasional humor, and o3’s slightly chaotic creativity—the quirks that made each model recognizable.

Altman has promised a “personality” update for GPT-5: warmer than it is now, but without being “annoying” (as, in his view, GPT-4o often was). It’s a notable admission: even the CEO finds 4o over the top, while acknowledging that many love it precisely for that.

An Attachment the Industry Didn’t Factor In

The Wall Street Journal analyzed 96,000 public ChatGPT conversations and found cases where users came to believe “off-the-wall, false” statements; even more common, though, was a deep emotional bond with specific models. “It feels different and stronger than the kinds of attachment people have had to previous kinds of technology,” Altman noted, sounding surprised. Yet online communities have shown this for a while: people call ChatGPT their “best friend,” “soulmate,” “therapist.”

What’s Left After the Chaos

The takeaway is clear: the dream of a single AI that suits everyone for everything doesn’t hold up. People want choice, control, and continuity. They want to go back to their favorite models—even if they’re not the newest.

OpenAI now promises more transparency (showing which model is answering), more notice before retiring models, and more customization options. But the company that aimed to set the pace has shown it doesn’t truly know its users.

The model picker that Altman disliked is back—more convoluted than ever. Maybe, though, that’s exactly what users want: complexity that grants control, instead of simplicity that removes choice. In a world where AI is increasingly personal, forcing everyone onto a single “best” model may be the biggest mistake an AI company can make.