ChatGPT is increasingly changing the world of work and, in some cases, daily life, providing valuable support in areas such as content creation, virtual assistance, information search and more. AI outputs are not always error-free. With the release of the 4.0 model, however, OpenAI has announced improvements such as a 40% decrease in the margin of error, recognition of images as well as text, and increased reasoning ability (at the expense of processing speed), among others.

Let us now compare the 3.5 and 4.0 models of ChatGPT through a few examples, ending with a final test in which we “feed” the AI a simple image link.

A Simple Question: a Joke

From this screenshot we can see that the 3.5 model takes a more flexible approach, while the 4.0 model gives an immediate and concise response. There is no substantial difference in this case, however, because the task is very simple.

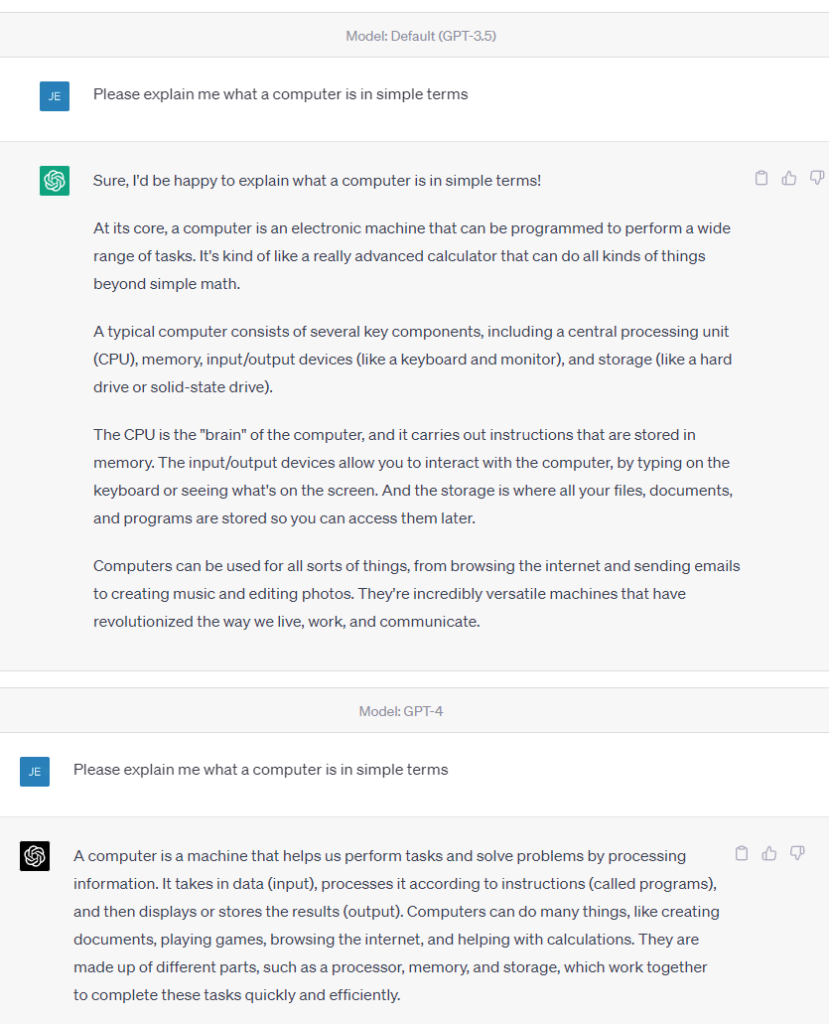

A Technical Question: What is a Computer

In this case we already have a discernible difference between the two answers.

Model 3.5, although we asked for an explanation in “plain language,” went on to analyze the components of a computer and how they work. It used technical terms that a complete beginner might not understand, even though it made an effort to explain them in very simple terms.

The answer provided by Model 4.0 was different: it gave a very concise answer in truly basic terms (thus fully complying with our instructions), explaining how a computer operates (input, execution, output) without going into too much detail. It made only a very brief mention of the components that make up a computer, without going into specifics.

A Scientific Question about the Sun

Both models recognize the statement we wrote as false. Again, ChatGPT 3.5 gives a longer and more flexible answer, limiting itself to basic but still correct concepts. Model 4.0, on the other hand, gives a more concise answer that is nevertheless backed by more precise data.

Last Test: a Foreign Language and the Description of a Picture

In our last test we use only ChatGPT’s 4.0 model, giving the AI a direct link to an image to analyze and asking the question in French. The purpose is to test both the quality of the French in the answer and whether the description of the image matches what it actually shows.

The syntax and grammar of the answer provided by ChatGPT 4.0 are formally correct French. The problem lies in the description of the image. ChatGPT describes a lush garden with flowers in different shades and different types of plants. Unfortunately, the image we proposed (as you can also view here) shows only two sunflowers in full bloom against a blue sky that is not even very well defined.

Once we point out the error, the AI tries to correct it based on the information we provided, this time giving a description that is more consistent with the image.

However, the tone the AI uses to describe something blatantly wrong may seem convincing to less attentive readers, because the text is still formally and grammatically correct in the language used. This aspect may also raise some doubts about the use of this technology to deliberately generate fake news. We do not mean to discredit a technology that is proving to be very useful and revolutionary, only to point out that it is under constant development and is currently not error-free. The progress made in so few months is there for all to see.

Featured Image by Pexels
