San Francisco-based OpenAI has just launched an exciting new AI model: GPT-4o. It is the latest update to the company’s famous ChatGPT, the chatbot now widely known for its ability to generate text and images almost as if it were human. The “o” in GPT-4o stands for “omni,” Latin for “all,” a nod to its ability to work not only with text but also with images and audio.

During a streamed event, Mira Murati, OpenAI’s chief technology officer, extolled the capabilities of the new model, saying it is “much faster” than its predecessors and considerably better at handling text, images, and sound.

Speaking of speed, OpenAI shared some impressive figures about GPT-4o’s responsiveness when processing audio. It can reportedly respond to audio input in as little as 232 milliseconds, with an average of 320 milliseconds, which approaches typical human response time in a conversation. This performance still needs to be put to the test, but it sounds promising.

For a while, ChatGPT has allowed users to listen to AI-generated responses through a virtual voice, but with GPT-4o it seems to be turning into something closer to a full virtual assistant, almost like talking to a real person. Sam Altman, the CEO of OpenAI, even compared GPT-4o to the artificial intelligence in Spike Jonze’s movie “Her,” in which the main character ends up falling in love with a system capable of conversing in a completely natural way.

During the livestream, Mira Murati also highlighted the increasingly sophisticated visual capabilities of OpenAI’s artificial intelligence. For example, she showed how GPT-4o can translate a picture of a menu written in another language on the fly. And that’s not all: in the future, we may see ChatGPT “watching” a live sporting event and explaining the rules of the game to viewers.

About GPT-4o Features

OpenAI’s new model, GPT-4o, introduces some interesting new features for ChatGPT users. You no longer have to wait for it to finish “talking” before asking a question: you can simply interrupt it. This makes the conversation with the AI much smoother and more natural, a bit like chatting with a friend.

Another feature is its ability to pick up emotions in the user’s voice, allowing it to respond in a tone better suited to the emotional context of the conversation. Initially, however, these advanced features will only be available to a small group of OpenAI business partners, because there is still some concern about how they might be used.

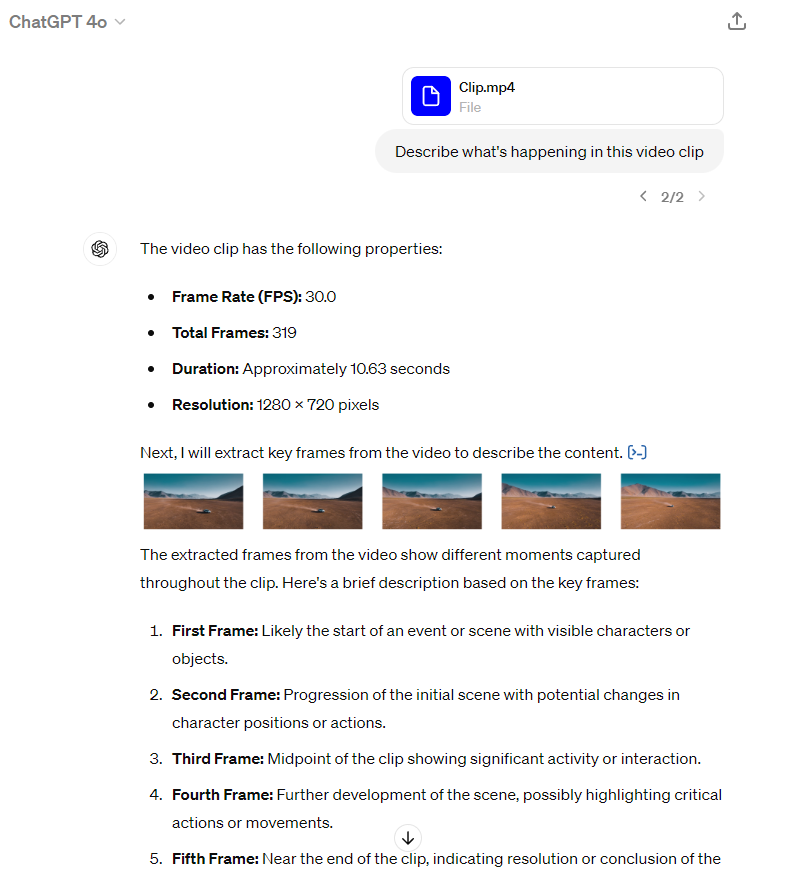

GPT-4o also allows users to upload videos to ChatGPT and ask the AI to describe the images or summarize the content. It is a real step forward in making interactions with AI richer and more engaging.

One piece of news that will please many, especially those who use ChatGPT outside the United States, is that GPT-4o is significantly more adept at handling text in languages other than English than its predecessor, GPT-4 Turbo. This is a welcome step toward making the technology more inclusive and globally accessible.

One of the most significant changes is that the advanced text and image features of GPT-4o will now be available to all ChatGPT users, not just paying subscribers. The GPT Store, the virtual store that houses artificial intelligences created by “Plus” users, will also soon be open to anyone, even those without a subscription.

Despite this wider access, Mira Murati stressed that Plus users will retain certain privileges, such as the ability to make up to five times as many requests to the chatbot as those who use the service for free. Note that Plus users are also subject to a limit, set at 40 interactions every three hours.

And there is also good news for developers: the cost of accessing the new model via API is 50 percent lower than for GPT-4 Turbo. This makes GPT-4o an even more attractive choice for those working in application development based on this technology.
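To make the 50 percent figure concrete, here is a minimal sketch of the arithmetic. Only the 50 percent ratio comes from the announcement: the per-token price used below is a hypothetical placeholder rather than OpenAI's actual pricing, and the function names are invented for illustration.

```python
# Ratio stated in the announcement: GPT-4o costs 50 percent less than GPT-4 Turbo.
GPT4O_DISCOUNT = 0.50


def gpt4o_price(turbo_price_per_million: float) -> float:
    """Return the GPT-4o price implied by a given GPT-4 Turbo price."""
    return turbo_price_per_million * (1 - GPT4O_DISCOUNT)


def request_cost(tokens: int, price_per_million: float) -> float:
    """Cost of processing `tokens` tokens at a price quoted per million tokens."""
    return tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    # Hypothetical placeholder values, NOT real OpenAI prices or usage figures.
    turbo_price = 10.0
    tokens = 250_000

    turbo_cost = request_cost(tokens, turbo_price)
    omni_cost = request_cost(tokens, gpt4o_price(turbo_price))
    print(f"GPT-4 Turbo: {turbo_cost:.2f}  GPT-4o: {omni_cost:.2f}")
```

Whatever the real prices are, the same workload would cost half as much on GPT-4o as on GPT-4 Turbo, which is what makes the model attractive for high-volume applications.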

About the Future of AI

Sam Altman, the CEO of OpenAI, had already hinted at these innovations in a message on X, denying that the announcement would be the much-discussed GPT-5 or a new AI search engine. Speculation was high, but we now know that the innovations are about refining current technologies.

After the event, Altman reflected on OpenAI’s journey in a post on his blog. He described how the company’s original vision, to “create general artificial intelligence that benefits humanity,” is evolving. These reflections point to a changing landscape and the promise of new technological frontiers opening up.